Mobile learning research to increase the evidence base for teaching strategies

Waiting at Leiden Central for your train, you quickly review what you just saw in class. How handy that it’s right there on your phone! A day later, the app’s smart algorithm sends a reminder and you review it again in line at Starbucks. Three days later? Again, waiting for your order at Julia’s.

A Comenius Leadership Fellow award

I am highly interested in the evidence base for teaching strategies for two related reasons. Simply put, I believe that (1) using scientifically sound information leads to better decision making and (2) improving education is both a moral obligation and a highly rewarding activity. In this blog, I focus on a recent attempt to expand the evidence base for teaching strategies in a world where nearly every student has a mobile phone, but few actually use it to study. The attempt is financed by an NRO Comenius Leadership Fellow Award from 2019 and is based on a proposal entitled “Mobile Learning”. Co-applicants were prof. dr. Han de Winde (FWN), prof. dr. Marco de Ruiter (LUMC), and dr. Maarten van de Ven (ICLON).

Incentives with unwanted pedagogical results

Many incentives have led to changes in our education system over the past decades. One that I disliked from the start is the penalizing of educational institutions when students take considerably longer than needed to finish their degree. Another was the removal of direct financial support to students while studying. Both incentives were probably assumed to lower the societal cost of education or perhaps to improve education itself. I don’t believe they do. As a result of these and other financial regulations based on quantity rather than quality, institutions want students to pass exams as quickly as possible, preferably at their first attempt, to reduce the average time that students need to complete their education. To facilitate this, tricks are applied. More focused teaching with fewer courses running in parallel improves exam results. Also, breaking up study components into smaller pieces that are individually assessed increases the pass-fail ratio. The financial incentive for students, in turn, encourages them to care more about the rate at which they acquire credits than about the rate at which they acquire the knowledge and know-how needed to enter the job market as a professional.

Cramming as a major problem

Improper incentives lead to unwanted studying behavior. One example is “cramming”: the short-term memorizing by focused studying that students tend to do just prior to taking an exam. Caring mostly about passing the exam, students focus on the means to pass. “What do I need to know to pass the exam?” surely surpasses “Do I understand and can I apply the content offered in this course?” these days. If passing requires (in part) knowing things by heart, cramming is highly effective. The student passes the exam the next day, but without repetition, the obtained knowledge is lost within days to weeks. Hermann Ebbinghaus showed this well over a century ago and various studies have confirmed it. Clearly, cramming is unwanted studying behavior. If ‘by heart knowledge’ is considered important, educational programs either have to take cramming into account and offer the same material repeatedly, or have to accept that students at the end of their program have little ‘by heart knowledge’. I don’t know anyone who accepts the latter. I am also pretty convinced that everyone would like their family doctor to know what their particular ailment is called without having to look it up in a book. The same goes for their lawyer: who wouldn’t want them to know what part of the law applies to their situation without having to “Google it”? But this doesn’t stop at academic degrees leading to a professional license. Every student needs a certain amount of by heart knowledge.

Spaced repetition, the testing effect, and artificial intelligence

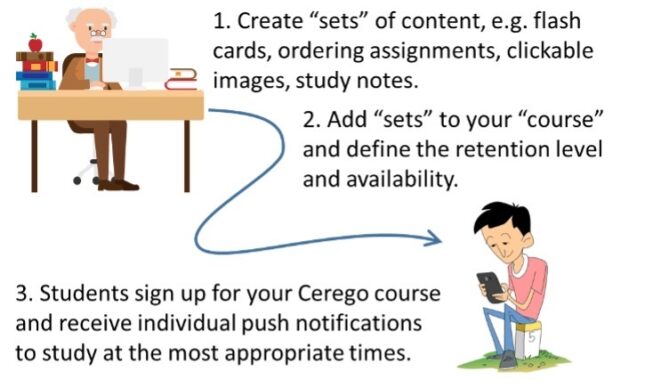

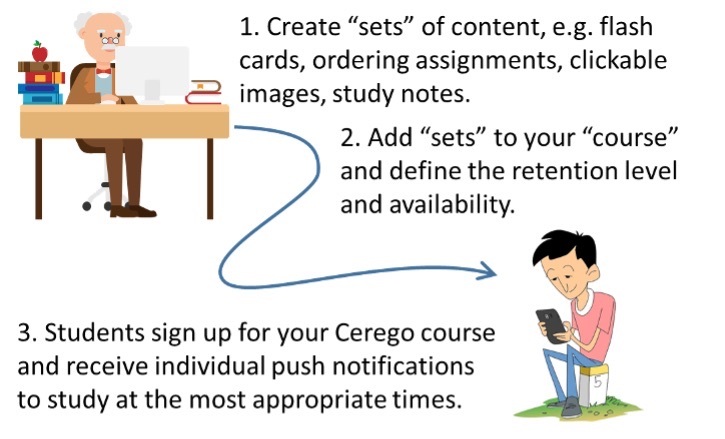

Long-term memorization benefits from repeated studying with appropriate intervals in between. The optimal length of these intervals depends on the particular student, what they studied last, and how they performed in previous study sessions. Repeated (summative) testing rather than repeated reading also helps build long-term memory. Spaced repetition and the testing effect are, therefore, two of the most important building blocks of a software package named Cerego. It combines these two ideas with an artificial intelligence (AI) engine that monitors the studying progress and behavior of each individual student in order to optimize retention of the study content. Push technology is used to inform students of the optimal time for a new study session, and the AI engine selects what is best repeated at that time. Applied through an app that runs particularly well on mobile phones, Cerego claims to help combat cramming and instil better studying skills in our students. However, a well-constructed app that is based on accepted pedagogical principles may not have the desired effect if applied improperly. Similarly, a claw hammer may be used to drive steel nails into a wall, but it will not work if you use the wrong side of the hammer or if the wall is made of steel. This is why we are interested in learning how to apply this new mobile phone-based technology in our academic setting.
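For readers who like to see the idea spelled out, here is a minimal sketch of how spaced repetition and self-testing can be combined in a scheduler. It is emphatically not Cerego's algorithm, which is proprietary and adapts to each student. The `Card` class, the `next_review` function, and the simple "double the interval after a correct answer, reset after a wrong one" rule are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Card:
    """One item to memorize, with its (hypothetical) scheduling state."""
    prompt: str
    interval_days: int = 1  # current gap between reviews, in days


def next_review(card: Card, recalled: bool, today: date) -> date:
    """Update the card after a self-test and return the next due date."""
    if recalled:
        # Successful retrieval: widen the gap (doubling is an arbitrary choice).
        card.interval_days *= 2
    else:
        # Failed retrieval: fall back to a short gap and rebuild from there.
        card.interval_days = 1
    return today + timedelta(days=card.interval_days)
```

A student who keeps answering correctly sees the same item after 2, 4, 8, ... days, while a wrong answer brings it back the next day. A real system would replace the fixed doubling rule with intervals tuned to the individual student and item.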

Our studies and results

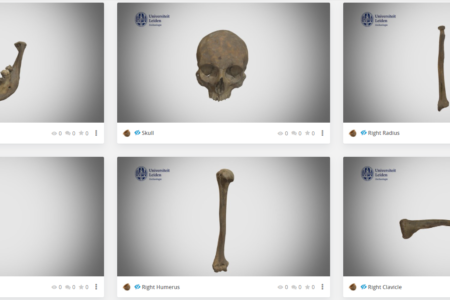

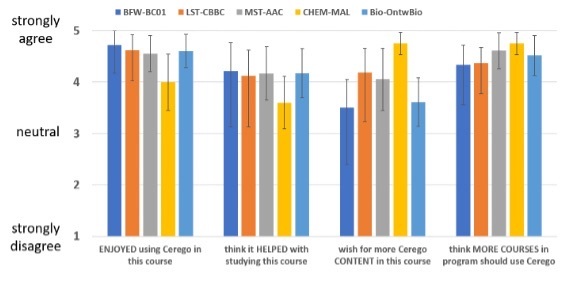

In the first year of our three-year project, we focused primarily on ‘how to implement’. We aimed to build experience by adding Cerego as a study tool in introductory courses across different programs. For example, prof. dr. Marco de Ruiter and dr. Huub van der Heide used it to teach parts of anatomy in the Medical program at the LUMC. Dr. Christian Tudorache and I implemented Cerego in introductory Biology and Chemistry courses at the Faculty of Science. Beyond coping with GDPR legislation, we learned how to construct content and how to apply it to support students in their efforts to memorize study content. Student experience with Cerego was assessed through short surveys. Students turned out to be very positive about the software package in general. The last question asked for their agreement, on a Likert scale of 1 to 5, with the statement that Cerego should be applied in more courses of their academic program. Most courses scored in the range of 4.3-4.8.

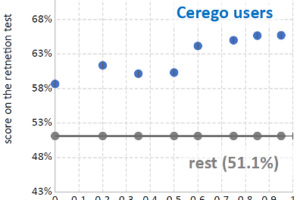

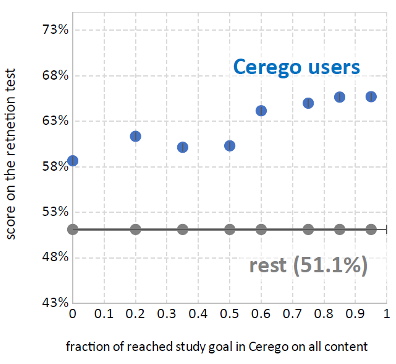

Positive student experience helped in expanding the user base. In the second year of the project, many more teachers and courses used Cerego. More data was collected on student experience with the same general result: students tend to love it. We also ran the first tests aiming to determine whether students who voluntarily use Cerego show better retention. Administered after a two-quarter intermission, a chemistry test showed that students who used Cerego in Q1 performed better on the entire test in Q4 than students who did not use Cerego. The difference scaled roughly linearly with the effort students had put into using Cerego in Q1. At the same time, the study indicated that voluntary subscription to the app also creates a selection bias. Students who hardly used Cerego still scored significantly higher than those who did not even register for the app. An ongoing study in this third year of our project aims to disentangle the selection bias from learning effects.

The future

Students really appreciate this app. It lets them use small pieces of ‘dead time’ in busy daily schedules for effective studying. The educators involved are also very positive about the software package, even without hard evidence that mobile learning with Cerego improves retention. This may prove enough to pick up this (or a similar) software package and add it to the tools in our digital learning environment. Some hurdles remain though, e.g. how to cover the financial costs. There are license fees, but also personnel costs for maintenance, costs for teachers or student assistants to create the study content within the app, teacher training, and so on. Also, some aspects of our studies so far have made us realize that we are nowhere close to maximizing the potential of ‘mobile learning’ in this way. For unknown reasons, far fewer than half of the registered students in Chemistry classes opt to use Cerego, while at Medicine nearly all students actively use it. Also, many students use the software package rather ineffectively and still show cramming behavior. Hence, we are not done and there is ample room for improving the evidence base that may support a decision to implement mobile learning technology.

© Ludo Juurlink and Leiden Teachers Blog, 2022. Unauthorised use and/or duplication of this material without express and written permission from this site’s author and/or owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to Ludo Juurlink and Leiden Teachers Blog with appropriate and specific direction to the original content.